stoney conductor: VM Backup


Revision as of 12:56, 23 October 2013


Overview

This page describes how VMs and VM-Templates are backed up inside the stoney cloud.

Basic idea

The main idea for backing up a VM or a VM-Template is to divide the task into three subtasks:

- Snapshot: Save the machine's state (CPU, memory and disk)

- Merge: Merge the Disk-Image-Snapshot with the Live-Image

- Retain: Export the snapshot files

A more detailed, technical description of these three sub-processes can be found in the Sub-Processes section below.

Furthermore, there is a control instance which can call each of these three sub-processes independently for a given machine. This allows the stoney cloud to handle different cases:

Backup a single machine

The procedure for backing up a single machine is simple: call the three sub-processes (snapshot, merge and retain) one after the other, stopping as soon as one of them fails. The control instance therefore only needs some very basic logic:

    object machine = args[0];
    if ( snapshot( machine ) ) {
        if ( merge( machine ) ) {
            if ( retain( machine ) ) {
                printf("Successfully backed up machine %s\n", machine);
            } else {
                printf("Error while retaining machine %s: %s\n", machine, error);
            }
        } else {
            printf("Error while merging machine %s: %s\n", machine, error);
        }
    } else {
        printf("Error while snapshotting machine %s: %s\n", machine, error);
    }
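The control logic above can be expressed as a small runnable Python sketch, assuming each sub-process is a callable that takes the machine name and returns `True` on success. `backup_machine` and the stub steps are hypothetical names for illustration; the real sub-processes are the shell steps described under Sub-Processes. Instead of nesting the `if` statements, the sketch walks through the steps in order and stops at the first failure, which has the same effect.

```python
from typing import Callable, Sequence, Tuple

# A step is a (verb, function) pair; the function returns True on success.
Step = Tuple[str, Callable[[str], bool]]


def backup_machine(machine: str, steps: Sequence[Step]) -> bool:
    """Run the sub-processes in order and stop at the first failing one."""
    for verb, run in steps:
        if not run(machine):
            print(f"Error while {verb} machine {machine}")
            return False
    print(f"Successfully backed up machine {machine}")
    return True


if __name__ == "__main__":
    # Hypothetical stubs standing in for the real snapshot, merge and retain.
    backup_machine("vm-001", [
        ("snapshotting", lambda m: True),
        ("merging", lambda m: True),
        ("retaining", lambda m: True),
    ])
```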

Backup multiple machines at the same time

When backing up multiple machines at the same time, we need to make sure that the downtimes of the machines are as close together as possible. Therefore the control instance should first call the snapshot process for all machines. Only after every machine has been snapshotted does the control instance call the merge and retain processes for each machine. The most important part here is that the control instance remembers whether the snapshot for a given machine was successful or not: if the snapshot failed, it must not call the merge and retain processes for that machine. So the control instance needs a little more logic:

    object machines[] = args[0];
    object successful_snapshots[];  // same length as machines, all elements initially null
    // Snapshot all machines
    for ( int i = 0; i < sizeof(machines) / sizeof(object); i++ ) {
        // If the snapshot was successful, store the machine at the same
        // position in the successful_snapshots array
        if ( snapshot( machines[i] ) ) {
            successful_snapshots[i] = machines[i];
        } else {
            printf("Error while snapshotting machine %s: %s\n", machines[i], error);
        }
    }
    // Merge and retain all successfully snapshotted machines
    for ( int i = 0; i < sizeof(successful_snapshots) / sizeof(object); i++ ) {
        // If the element at this position is not null, the snapshot
        // for this machine was successful
        if ( successful_snapshots[i] ) {
            if ( merge( successful_snapshots[i] ) ) {
                if ( retain( successful_snapshots[i] ) ) {
                    printf("Successfully backed up machine %s\n", successful_snapshots[i]);
                } else {
                    printf("Error while retaining machine %s: %s\n", successful_snapshots[i], error);
                }
            } else {
                printf("Error while merging machine %s: %s\n", successful_snapshots[i], error);
            }
        }
    }
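The two-phase logic above can be sketched in Python as follows, assuming the three sub-processes are callables returning `True` on success. `backup_machines` is a hypothetical name. Collecting the successfully snapshotted machines in a list makes the null check of the pseudocode unnecessary, while keeping the key property: every snapshot happens before any merge or retain.

```python
from typing import Callable, Dict, List


def backup_machines(
    machines: List[str],
    snapshot: Callable[[str], bool],
    merge: Callable[[str], bool],
    retain: Callable[[str], bool],
) -> Dict[str, bool]:
    """Snapshot all machines first, then merge and retain the successful ones.

    Returns a mapping from machine name to overall backup success.
    """
    results = {machine: False for machine in machines}

    # Phase 1: snapshot every machine so that the downtimes are close together.
    successful_snapshots = []
    for machine in machines:
        if snapshot(machine):
            successful_snapshots.append(machine)
        else:
            print(f"Error while snapshotting machine {machine}")

    # Phase 2: merge and retain only the machines whose snapshot succeeded.
    for machine in successful_snapshots:
        if not merge(machine):
            print(f"Error while merging machine {machine}")
        elif not retain(machine):
            print(f"Error while retaining machine {machine}")
        else:
            print(f"Successfully backed up machine {machine}")
            results[machine] = True
    return results
```

A machine whose snapshot fails is reported once and never reaches merge or retain; the other machines are unaffected.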

Sub-Processes

Snapshot

- Create a snapshot with state:
  - If the VM `vm-001` is running:
    - Save the state of VM `vm-001` to the file `vm-001.state` (this file can either be created on a RAM-Disk or directly in the retain location; this example saves it to a RAM-Disk): `virsh save vm-001 /path/to/ram-disk/vm-001.state`
    - After this command, the VM's CPU and memory state is represented by the file `/path/to/ram-disk/vm-001.state`, and the VM `vm-001` is shut down.
  - If the VM `vm-001` is shut down:
    - Create a fake state file for the VM: `echo "Machine is not running, no state file" > /path/to/ram-disk/vm-001.state`

- Move the disk image `/path/to/images/vm-001.qcow2` to the retain location: `mv /path/to/images/vm-001.qcow2 /path/to/retain/vm-001.qcow2`
  - Please note: The retain directory (`/path/to/retain/`) has to be on the same partition as the images directory (`/path/to/images/`). This makes the `mv` operation very fast (the file is only renamed, no data is copied), so the downtime (remember, the VM `vm-001` is shut down at this point) is as short as possible.
  - Please note: If the VM `vm-001` has more than one disk image, repeat this step for every disk image.

- Create the new (empty) disk image with the old one as its backing store file: `qemu-img create -f qcow2 -b /path/to/retain/vm-001.qcow2 /path/to/images/vm-001.qcow2`
  - Please note: If the VM `vm-001` has more than one disk image, repeat this step for every disk image.

- Set the correct ownership and permissions on the newly created image:
  - `chmod 660 /path/to/images/vm-001.qcow2`
  - `chown root:vm-storage /path/to/images/vm-001.qcow2`
  - Please note: If the VM `vm-001` has more than one disk image, repeat these steps for every disk image.

- Save the VM's XML description:
  - Save the current XML description of VM `vm-001` to a file in the retain location: `virsh dumpxml vm-001 > /path/to/retain/vm-001.xml`

- Save the backend entry:
  - There is no generic command to save the backend entry, since the command depends on the backend. What matters is that the backend entry of VM `vm-001` is saved to the retain location as `/path/to/retain/vm-001.backend`.

- Restore the VM `vm-001` from its saved state (this also starts the VM): `virsh restore /path/to/ram-disk/vm-001.state`
  - Please note: After this operation the VM `vm-001` is running again (it continues where it was stopped), and we have a consistent backup of VM `vm-001`:
    - The file `/path/to/ram-disk/vm-001.state` contains the CPU and memory state of VM `vm-001` at time T1.
    - The file `/path/to/retain/vm-001.qcow2` contains the disk state of VM `vm-001` at time T1.
      - Important: The live disk image `/path/to/images/vm-001.qcow2` still references this file as its backing store, so you must not delete or move it until the merge sub-process has completed!
    - The file `/path/to/retain/vm-001.xml` contains the XML description of VM `vm-001` at time T1.
    - The file `/path/to/retain/vm-001.backend` contains the backend entry of VM `vm-001` at time T1.

- Move the state file from the RAM-Disk to the retain location (if you used the RAM-Disk to save the VM's state):
  - `mv /path/to/ram-disk/vm-001.state /path/to/retain/vm-001.state`

See also: Snapshot workflow
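To make the order of the snapshot steps explicit, here is a Python sketch that only builds the shell commands for one VM (it does not execute them). `snapshot_commands`, `same_filesystem` and their parameters are hypothetical names, not part of the stoney conductor scripts. Two deviations are deliberate assumptions: the restore step is skipped for a VM that was shut down, since the fake state file cannot be restored, and `-F qcow2` (backing file format) is passed to `qemu-img create`, because recent QEMU releases refuse to create an overlay with `-b` but no backing format. Saving the backend entry is backend-specific and therefore omitted. `same_filesystem` checks the same-partition requirement by comparing the device IDs reported by `os.stat`.

```python
import os
import shlex
from typing import List, Sequence


def same_filesystem(path_a: str, path_b: str) -> bool:
    """True if both paths are on the same device, so `mv` is a cheap rename."""
    return os.stat(path_a).st_dev == os.stat(path_b).st_dev


def snapshot_commands(
    vm: str,
    running: bool,
    disks: Sequence[str],
    images_dir: str = "/path/to/images",
    retain_dir: str = "/path/to/retain",
    ram_disk: str = "/path/to/ram-disk",
) -> List[str]:
    """Return the shell commands of the snapshot sub-process, in order."""
    q = shlex.quote
    state_file = f"{ram_disk}/{vm}.state"
    cmds: List[str] = []

    # Save CPU and memory state (this stops the VM), or write a fake state file.
    if running:
        cmds.append(f"virsh save {q(vm)} {q(state_file)}")
    else:
        cmds.append(f"echo {q('Machine is not running, no state file')} > {q(state_file)}")

    for disk in disks:
        live = f"{images_dir}/{disk}"
        retained = f"{retain_dir}/{disk}"
        # Move the disk image away (a cheap rename on the same partition).
        cmds.append(f"mv {q(live)} {q(retained)}")
        # New empty overlay image backed by the retained image.
        cmds.append(f"qemu-img create -f qcow2 -F qcow2 -b {q(retained)} {q(live)}")
        # Ownership and permissions of the new image.
        cmds.append(f"chmod 660 {q(live)}")
        cmds.append(f"chown root:vm-storage {q(live)}")

    # XML description (saving the backend entry is backend-specific, not covered).
    cmds.append(f"virsh dumpxml {q(vm)} > {q(f'{retain_dir}/{vm}.xml')}")

    # Assumption: only a VM that was running is restored.
    if running:
        cmds.append(f"virsh restore {q(state_file)}")

    # Move the state file from the RAM-Disk to the retain location.
    cmds.append(f"mv {q(state_file)} {q(f'{retain_dir}/{vm}.state')}")
    return cmds
```

Because two of the commands use output redirection, they would have to be run through a shell, for example with `subprocess.run(cmd, shell=True, check=True)`.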

Merge

- Check if the VM `vm-001` is running:
  - If not, start the VM in paused state: `virsh start --paused vm-001`

- Merge the live disk image (`/path/to/images/vm-001.qcow2`) with its backing store file (`/path/to/retain/vm-001.qcow2`): `virsh qemu-monitor-command vm-001 --hmp "block_stream drive-virtio-disk0"`
  - Please note: If a VM has more than one disk image, repeat this step for every image, increasing the number at the end of the drive name. The command to merge the second disk image would therefore be: `virsh qemu-monitor-command vm-001 --hmp "block_stream drive-virtio-disk1"`

- If the machine is running in paused state (i.e. we started it in the first step because it was not running), stop it again:
  - `virsh shutdown vm-001`

Please note: After these steps, the live disk image `/path/to/images/vm-001.qcow2` no longer contains a reference to the image at the retain location (`/path/to/retain/vm-001.qcow2`). This is important for the retain process, which moves that image away.

See also: Merge workflow
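A matching sketch for the merge sub-process, again generating the commands without running them. `merge_commands` and its parameters are hypothetical, and the drive aliases assume virtio disks named `drive-virtio-diskN` as in the example above. Note that QEMU's `block_stream` starts a background block job, so a real implementation presumably has to wait until that job has finished (for example by polling the `info block-jobs` monitor command) before shutting the VM down or starting the retain process; this sketch does not model that wait.

```python
from typing import List


def merge_commands(vm: str, running: bool, disk_count: int) -> List[str]:
    """Return the shell commands of the merge sub-process, in order."""
    cmds: List[str] = []
    # A shut-down VM is started in paused state so QEMU can stream its disks.
    if not running:
        cmds.append(f"virsh start --paused {vm}")
    # Pull the backing store data into each live image: disk0, disk1, ...
    for index in range(disk_count):
        cmds.append(
            f'virsh qemu-monitor-command {vm} --hmp "block_stream drive-virtio-disk{index}"'
        )
    # Stop the VM again if it was only started for the merge.
    if not running:
        cmds.append(f"virsh shutdown {vm}")
    return cmds
```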

Retain

- Move all the files from the retain directory (`/path/to/retain/`) to the backup directory (`/path/to/backup/`):
  - Move the VM's state file to the backup directory:
    - `mv /path/to/retain/vm-001.state /path/to/backup/vm-001.state`
  - Move the VM's disk image to the backup directory:
    - `mv /path/to/retain/vm-001.qcow2 /path/to/backup/vm-001.qcow2`
    - Please note: If the VM `vm-001` has more than one disk image, repeat this step for each disk image.
  - Move the VM's XML description file to the backup directory:
    - `mv /path/to/retain/vm-001.xml /path/to/backup/vm-001.xml`
  - Move the VM's backend entry file to the backup directory:
    - `mv /path/to/retain/vm-001.backend /path/to/backup/vm-001.backend`

See also: Retain workflow
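The retain sub-process is plain file moving, so it can be sketched directly with Python's standard library. `retain` and its parameters are hypothetical names; the file naming (`<vm>.state`, `<vm>.xml`, `<vm>.backend` plus one entry per disk image) follows the example paths above. The function must only run after the merge, because until then the live image still references the retained disk image.

```python
import os
import shutil
from typing import List, Sequence


def retain(vm: str, disks: Sequence[str], retain_dir: str, backup_dir: str) -> List[str]:
    """Move all backup files of `vm` from the retain to the backup directory.

    Returns the list of destination paths.
    """
    names = [f"{vm}.state", *disks, f"{vm}.xml", f"{vm}.backend"]
    moved = []
    for name in names:
        src = os.path.join(retain_dir, name)
        dst = os.path.join(backup_dir, name)
        # shutil.move renames within one filesystem and copies across filesystems.
        shutil.move(src, dst)
        moved.append(dst)
    return moved
```

Unlike the snapshot step, the retain step does not cause downtime, so the backup directory may well live on another filesystem; `shutil.move` then falls back to copying.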

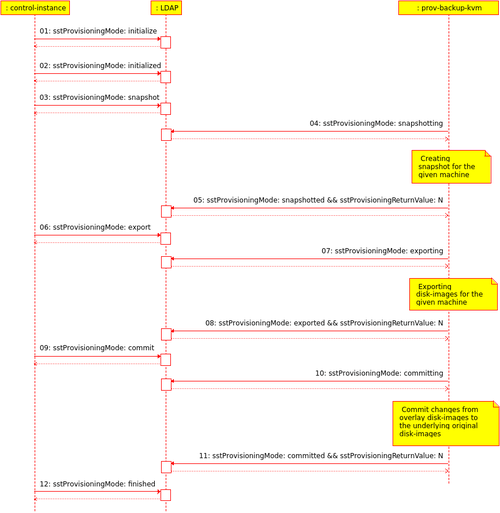

Communication through backend

Since the stoney cloud is (as the name already says) a cloud solution, it makes sense to involve a backend (in our case OpenLDAP) in the whole process. This makes it possible to run the backup jobs decentrally on every vm-node. The control instance modifies the backend, and these changes are picked up by the different backup daemons on the vm-nodes. The communication could look as shown in Figure 1.

State of the art

Since we do not have a working control instance, we need a workaround for backing up the machines:

- We already have a BackupKVMWrapper.pl script (File-Backend) which executes the three sub-processes in the correct order for a given list of machines (see Backup multiple machines at the same time).

- We already have the implementation of the whole backup with the LDAP-Backend (see stoney conductor: prov backup kvm).

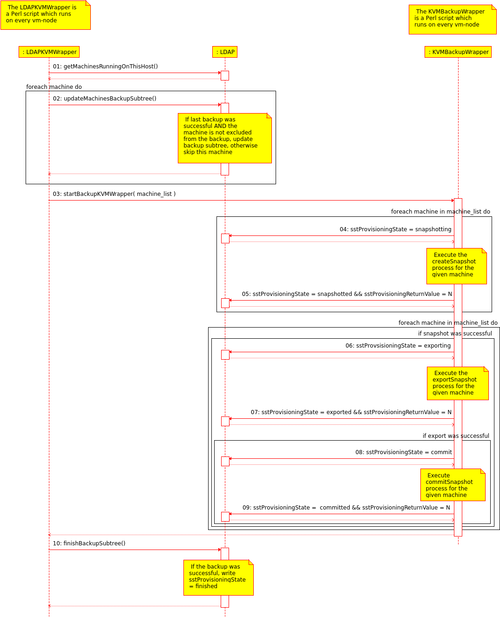

- We can now combine these two existing scripts and create a wrapper (let's call it KVMBackup) which adds some logic around BackupKVMWrapper.pl: the KVMBackup wrapper generates the list of machines that need a backup.

The behaviour on our servers is as follows (cf. Figure 2):

- The (decentralized) KVMBackup wrapper generates a list of all machines running on the current host.
  - For each of these machines:
    - Check if the machine is excluded from the backup; if yes, remove the machine from the list.
    - Check if the last backup was successful; if not, remove the machine from the list.
- Update the backup subtree for each machine in the list:
  - Remove the old backup leaf (the "yesterday-leaf") and add a new one (the "today-leaf").
  - After this step, the machines are ready to be backed up.
- Call the BackupKVMWrapper.pl script with the machine list as a parameter.
- Wait for the BackupKVMWrapper.pl script to finish.
- Go through all machines again and update the backup subtree one last time:
  - Check if the backup was successful; if yes, set sstProvisioningMode = finished (see also TBD).
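The KVMBackup behaviour described above can be sketched in Python against an in-memory dictionary instead of LDAP. Apart from `sstProvisioningMode = finished`, everything here is an assumption for illustration: the record layout, the `excluded` flag, a single `backup_leaf` per machine, treating "last backup successful" as "the previous leaf has `sstProvisioningMode` set to `finished`", and `run_wrapper`, a hypothetical stand-in for calling BackupKVMWrapper.pl that reports which machines were backed up successfully.

```python
from typing import Callable, Dict, List, Set

# Hypothetical in-memory stand-in for the LDAP backup subtrees:
# backend[machine] = {"excluded": bool,
#                     "backup_leaf": {"date": str, "sstProvisioningMode": str or None}}
Backend = Dict[str, dict]


def kvm_backup(
    host_machines: List[str],
    backend: Backend,
    run_wrapper: Callable[[List[str]], Set[str]],
    today: str,
) -> List[str]:
    """Sketch of the KVMBackup wrapper; returns the machines backed up today."""
    # Drop machines that are excluded or whose last backup did not finish.
    machines = [
        m for m in host_machines
        if not backend[m]["excluded"]
        and backend[m]["backup_leaf"]["sstProvisioningMode"] == "finished"
    ]
    # Replace the "yesterday-leaf" by a fresh "today-leaf".
    for m in machines:
        backend[m]["backup_leaf"] = {"date": today, "sstProvisioningMode": None}
    # Call BackupKVMWrapper.pl (modelled by run_wrapper) and wait for it.
    succeeded = run_wrapper(machines)
    # Last update of the subtree: mark every successful backup as finished.
    for m in machines:
        if m in succeeded:
            backend[m]["backup_leaf"]["sstProvisioningMode"] = "finished"
    return [m for m in machines if m in succeeded]
```

In a real deployment `run_wrapper` could call the Perl script with `subprocess.run`, which blocks until the script exits and thereby covers the "wait" step; how BackupKVMWrapper.pl reports per-machine success is not described on this page.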